Recently I’ve been developing a Python ocpp-asgi library that enables implementing event-based, serverless support for the Open Charge Point Protocol (OCPP). In this blog post I describe the motivation and the architecture options that inspired me to start this work, in order to overcome the limitations of the existing (and nice) ocpp library. Before even starting the laborious (but fun) task of developing a new open source library, it’s important to understand what benefits the library will unlock, e.g. regarding the available architecture options.

Baseline system

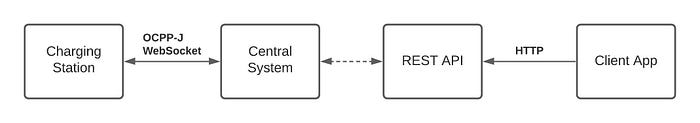

The OCPP protocol is about transferring specified JSON messages between a client (Charging Station) and a server (Central System) over a WebSocket connection. The architecture for a baseline OCPP system would look like this:

Pros:

- The system architecture looks really straightforward.

Cons:

- This is not a real architecture, and it can’t fully support the OCPP specification’s requirements either.
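For reference, OCPP-J (the JSON flavour of OCPP) frames every message as a JSON array whose first element is the message type: 2 for a CALL (request), 3 for a CALLRESULT and 4 for a CALLERROR. The minimal sketch below encodes and decodes these frames. The helper names are made up for illustration, and the BootNotification payload uses OCPP 1.6 field names.

```python
import json
import uuid
from typing import Optional

# OCPP-J message type identifiers
CALL, CALLRESULT, CALLERROR = 2, 3, 4


def make_call(action: str, payload: dict, unique_id: Optional[str] = None) -> str:
    """Encode a request as [2, "<UniqueId>", "<Action>", {payload}]."""
    unique_id = unique_id or str(uuid.uuid4())
    return json.dumps([CALL, unique_id, action, payload])


def make_call_result(unique_id: str, payload: dict) -> str:
    """Encode a response as [3, "<UniqueId>", {payload}]."""
    return json.dumps([CALLRESULT, unique_id, payload])


def parse(message: str) -> dict:
    """Decode an incoming frame into a dict keyed by its parts."""
    frame = json.loads(message)
    message_type = frame[0]
    if message_type == CALL:
        _, unique_id, action, payload = frame
        return {"type": "CALL", "id": unique_id, "action": action, "payload": payload}
    if message_type == CALLRESULT:
        _, unique_id, payload = frame
        return {"type": "CALLRESULT", "id": unique_id, "payload": payload}
    if message_type == CALLERROR:
        _, unique_id, code, description, details = frame
        return {"type": "CALLERROR", "id": unique_id, "code": code,
                "description": description, "details": details}
    raise ValueError(f"unknown message type {message_type!r}")


# Example: a Charging Station announcing itself after boot
request = make_call("BootNotification",
                    {"chargePointVendor": "ExampleVendor", "chargePointModel": "X1"})
```

The unique id ties a CALLRESULT or CALLERROR back to the CALL it answers. Both parties can initiate CALLs over the same connection, which is why a plain request/response HTTP API is not enough.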

User interactions

An OCPP system where only Charging Stations and the Central System can communicate with each other wouldn’t be very useful. Users need to be able to interact with Charging Stations, e.g. to remotely start and stop a charging session. To facilitate this, we must add an interface that users can communicate with and which in turn communicates with a specific Charging Station. Let’s say this interface is a REST API (there are of course other options as well) and that it’s somehow coupled with the Central System. This is still not a real architecture that can be implemented, but rather a concept view. Now our OCPP system architecture looks as follows:

Pros:

- The system architecture still doesn’t have that much complexity.

Cons:

- This is still not a real architecture, as we haven’t yet considered which concrete services to implement it with.
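To make the user-to-Charging-Station direction concrete, here is a minimal sketch of what the REST interface might do when a user asks to start charging remotely: validate the request and turn it into an OCPP 1.6 RemoteStartTransaction CALL addressed to one Charging Station. The request shape and function name are hypothetical; getting the frame onto the right WebSocket connection is the problem the rest of this post deals with.

```python
import json
import uuid
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class RemoteStartRequest:
    """Hypothetical body of a REST call such as POST /charging-stations/{id}/start."""
    charging_station_id: str
    id_tag: str
    connector_id: Optional[int] = None


def to_remote_start_call(request: RemoteStartRequest) -> Tuple[str, str]:
    """Return (target charging station id, OCPP 1.6 RemoteStartTransaction CALL frame)."""
    # OCPP 1.6 idTag is an IdToken (CiString20Type): 1 to 20 characters
    if not 1 <= len(request.id_tag) <= 20:
        raise ValueError("idTag must be 1-20 characters")
    payload = {"idTag": request.id_tag}
    if request.connector_id is not None:
        if request.connector_id <= 0:
            raise ValueError("connectorId must be greater than 0")
        payload["connectorId"] = request.connector_id
    frame = json.dumps([2, str(uuid.uuid4()), "RemoteStartTransaction", payload])
    return request.charging_station_id, frame
```

Returning the target id separately makes the open question explicit: something still has to find the WebSocket connection that belongs to that Charging Station.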

AWS Services

Let’s move on and start drafting a more concrete architecture, i.e. which AWS services could be utilized to ensure security, availability and scalability as well as cost efficiency. We must also consider what kind of software components we need to (or can) develop. As serverless and Python fans, we know that it’s simple to run a REST API within AWS Lambda using the ASGI-compatible FastAPI framework. Unfortunately, the ocpp library available for Python requires a direct handle to the WebSocket instance, so we can’t run it serverless. AWS has a number of services that support WebSockets, e.g. API Gateway for WebSockets and the Application Load Balancer (ALB), so we must analyze their feature sets and pick the one that suits our architecture.
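ASGI reduces an application to one async callable, `app(scope, receive, send)`: `scope` describes the connection, and `receive`/`send` exchange event dictionaries with the server. Adapters such as Mangum translate API Gateway events into this interface, which is why running FastAPI in Lambda is straightforward. The bare sketch below uses no framework, and `call_app` is a hypothetical stand-in for a server or adapter. A WebSocket connection uses the same callable with a `websocket` scope and `websocket.connect`/`websocket.receive`/`websocket.send` events, so the application never has to own the socket itself.

```python
import asyncio
import json
from typing import Tuple


async def app(scope, receive, send):
    """Minimal ASGI HTTP application: echoes the request path as JSON."""
    if scope["type"] != "http":
        raise RuntimeError("this sketch only handles HTTP scopes")
    # Drain the request body, which may arrive in several chunks
    more_body = True
    while more_body:
        message = await receive()
        more_body = message.get("more_body", False)
    body = json.dumps({"path": scope["path"]}).encode()
    await send({
        "type": "http.response.start",
        "status": 200,
        "headers": [(b"content-type", b"application/json")],
    })
    await send({"type": "http.response.body", "body": body})


async def call_app(path: str) -> Tuple[int, bytes]:
    """Drive the app the way a server or a Lambda adapter would (hypothetical helper)."""
    scope = {"type": "http", "method": "GET", "path": path, "headers": []}
    sent = []

    async def receive():
        return {"type": "http.request", "body": b"", "more_body": False}

    async def send(message):
        sent.append(message)

    await app(scope, receive, send)
    return sent[0]["status"], sent[1]["body"]
```

Because the application only ever sees event dictionaries, the same code can run behind a long-lived server or be invoked once per Lambda event.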

There are a few requirements, coming from the OCPP specification as well as from the existing ocpp library, that we must take into account. We require support for:

- WebSocket protocol

- A direct handle to the WebSocket connection (i.e. connect/disconnect events and “send” and “recv” methods). This requirement comes from the ocpp library.

- Mutual TLS authentication with client certificate inspection.

- TLS with a server certificate and client Basic authentication.

- Path parameter style unique WebSocket endpoint URLs for each Charging Station’s connection (e.g. wss://<baseurl>/<charging-station-unique-id>).
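The URL and Basic authentication requirements boil down to two small pieces of server-side logic: reading the Charging Station’s identity from the last path segment of the WebSocket URL and decoding the `Authorization` header of the upgrade request. A minimal standard-library sketch follows. The function names and the `keys` lookup are hypothetical, and it assumes the Basic username must equal the Charging Station identity. The password is compared in constant time with `hmac.compare_digest`.

```python
import base64
import binascii
import hmac
from typing import Dict, Optional, Tuple
from urllib.parse import unquote, urlsplit


def charging_station_id_from_url(url: str) -> str:
    """Extract <charging-station-unique-id> from wss://<baseurl>/<charging-station-unique-id>."""
    path = urlsplit(url).path.rstrip("/")
    station_id = unquote(path.rsplit("/", 1)[-1])
    if not station_id:
        raise ValueError(f"no charging station id in {url!r}")
    return station_id


def parse_basic_auth(header_value: str) -> Optional[Tuple[str, str]]:
    """Decode 'Basic base64(user:password)'; return None if malformed."""
    scheme, _, encoded = header_value.partition(" ")
    if scheme.lower() != "basic" or not encoded:
        return None
    try:
        decoded = base64.b64decode(encoded, validate=True).decode("utf-8")
    except (binascii.Error, UnicodeDecodeError):
        return None
    username, sep, password = decoded.partition(":")
    return (username, password) if sep else None


def is_authorized(url: str, header_value: str, keys: Dict[str, str]) -> bool:
    """Accept only if the username matches the id in the URL and the password matches its key."""
    station_id = charging_station_id_from_url(url)
    credentials = parse_basic_auth(header_value)
    if credentials is None:
        return False
    username, password = credentials
    expected = keys.get(station_id)
    if username != station_id or expected is None:
        return False
    return hmac.compare_digest(password.encode(), expected.encode())
```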

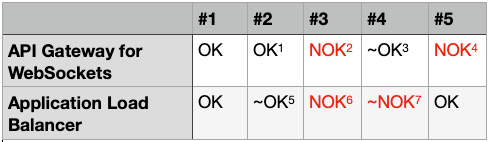

The following table shows how well each service meets the requirements:

Here are more details:

- Acts as a proxy and reverse API for WebSocket connections, and thus allows integrating services (e.g. AWS Lambda) that process incoming messages in an event-based manner as an invocation payload. The integration response is then delivered back to the client. This is exactly what API Gateway for WebSockets is meant for, but unfortunately, due to the existing ocpp library, it doesn’t meet our requirements.

- API Gateway REST APIs support mutual TLS. In that case, the client’s certificate may be inspected further in a Lambda authorizer after the initial authentication. API Gateway for WebSockets doesn’t support this yet.

- Supports TLS termination and can add custom authorization using Lambda. However, it’s unknown whether this works together with mutual TLS.

- At the time, there was no support for path-parameter-style unique endpoint URLs.

- ALB support for WebSockets means that it’s possible to connect with ws/wss and route traffic to specific target groups with stickiness enabled. Basically, this means that one still needs to implement the WebSocket server itself, and the ALB is only used for load balancing.

- Supports TLS termination. Client certificate will be stripped off during TLS termination.

- Supports TLS termination. Supports client authentication in a few ways (e.g. an OIDC-compliant IdP, or a Cognito user pool through social IdPs or corporate identities). As we’re not authenticating users here, this approach didn’t exactly fit, although it might be doable.

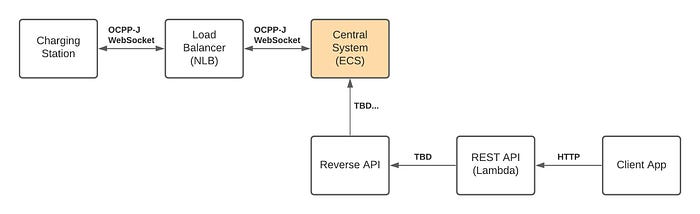

We end up running the Central System as multiple tasks within Elastic Container Service (ECS) and routing traffic via a Network Load Balancer, so that we are able to support requirements #3 and #4 (mutual TLS, and TLS with Basic authentication).

Pros:

- The system architecture looks a little more complicated, but nothing we couldn’t handle.

Cons:

- We don’t yet know how to implement the Reverse API or how it communicates with the Central System.

- We must come up with a strategy for scaling Central System instances within ECS. Also, deployments are challenging due to the WebSocket connections that are maintained within each Central System instance.
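Mutual TLS with client certificate inspection only works if the Central System sees the client certificate itself. Assuming the Network Load Balancer uses a plain TCP listener (so the TLS handshake passes through to the ECS tasks untouched), each task would need a server-side TLS context roughly like the sketch below, built with Python’s standard `ssl` module. File paths and function names are placeholders. With `require_client_cert=False`, the same context serves the TLS-plus-Basic-authentication case.

```python
import ssl
from typing import Optional


def build_server_context(certfile: Optional[str] = None,
                         keyfile: Optional[str] = None,
                         client_ca_file: Optional[str] = None,
                         require_client_cert: bool = True) -> ssl.SSLContext:
    """Server-side TLS context for a Central System task that terminates TLS itself."""
    context = ssl.create_default_context(ssl.Purpose.CLIENT_AUTH)
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    if certfile:
        # Server certificate presented to the Charging Stations
        context.load_cert_chain(certfile, keyfile)
    if client_ca_file:
        # CA that issued the Charging Station certificates
        context.load_verify_locations(cafile=client_ca_file)
    # CERT_REQUIRED: the handshake fails unless the client presents a trusted certificate.
    # CERT_NONE: server certificate only; authenticate the client with Basic auth instead.
    context.verify_mode = ssl.CERT_REQUIRED if require_client_cert else ssl.CERT_NONE
    return context


def client_common_name(peer_cert: dict) -> Optional[str]:
    """Pick the subject CN from SSLSocket.getpeercert() output for further inspection."""
    for rdn in peer_cert.get("subject", ()):
        for key, value in rdn:
            if key == "commonName":
                return value
    return None
```

The CN (or another certificate field) can then be compared with the Charging Station id taken from the URL.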

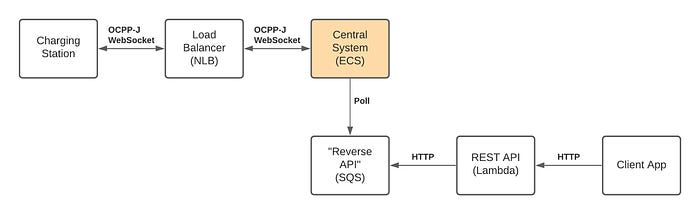

Reverse API

The term “Reverse API” is derived from “Reverse Proxy”, and it means a component that is able to initiate communication from within the backend system towards a specific client via its WebSocket connection. The tricky thing here is that, as we’re running multiple Central System instances within a container service, we have no obvious way to know which instance “holds” a particular WebSocket connection. There are multiple ways to solve this problem. We assume that the need for “reverse” communication is not that frequent, so using SQS might be just fine. This is not even close to a perfect solution, but let’s accept the risks and, for the sake of the architecture, say it will be sufficient.

This could very well be how the final architecture’s key pieces fit together. Of course, a full system will require several other services as well.

Pros:

- We have all pieces in place for implementing OCPP system.

Cons:

- The system architecture looks a little more complicated, but nothing we couldn’t handle.

- The Reverse API solution is not ideal for very frequent “reverse” communication.

- We must come up with a strategy for scaling Central System instances within ECS.

- Deployments are challenging due to the WebSocket connections that are maintained within each Central System instance.
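The post doesn’t spell out how SQS gets a message to the instance that holds the connection. One possible scheme, shown here purely as an assumption with an in-memory stand-in for the queue (no real SQS calls): every Central System instance polls a shared queue, delivers the message if it holds the target connection, and otherwise releases it back to the queue so another instance can pick it up. It also shows why the approach is far from perfect: a message may bounce between instances before it reaches the right one. All class names are hypothetical.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class ReverseCommand:
    charging_station_id: str
    ocpp_frame: str
    receive_count: int = 0


@dataclass
class InMemoryQueue:
    """Stand-in for an SQS queue: receive() hides a message, release() makes it visible again."""
    messages: deque = field(default_factory=deque)

    def send(self, command: ReverseCommand) -> None:
        self.messages.append(command)

    def receive(self):
        if not self.messages:
            return None
        command = self.messages.popleft()
        command.receive_count += 1
        return command

    def release(self, command: ReverseCommand) -> None:
        # Hand the message back so the next poller can try it
        self.messages.append(command)


@dataclass
class CentralSystemInstance:
    name: str
    connections: set  # ids of Charging Stations whose WebSockets this instance holds
    delivered: list = field(default_factory=list)

    def poll_once(self, queue: InMemoryQueue, max_receives: int = 10) -> None:
        command = queue.receive()
        if command is None:
            return
        if command.charging_station_id in self.connections:
            # Real code would send command.ocpp_frame over the held WebSocket here
            self.delivered.append(command)
        elif command.receive_count < max_receives:
            queue.release(command)
        # else: give up on the message (analogous to a dead-letter queue)
```

With real SQS, the release step would correspond to `ChangeMessageVisibility` with a visibility timeout of 0, and the give-up branch to a redrive policy that moves the message to a dead-letter queue after `maxReceiveCount` receives.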

Identifying performance bottlenecks

The next iteration is a lesson learnt from the real implementation. When load testing our system, we noticed two performance (CPU) bottlenecks: synchronous database operations and WebSocket TLS overhead. Their exact shares vary depending on the use case, but judging by the profiler figures, 50% and 40% respectively are not far from the truth.

In general, we’ve been using the asynchronous programming model throughout the system, but for the SQLAlchemy ORM library the async API wasn’t available when we started development. Performance-wise, it’s extremely unfortunate to block the event loop while just waiting for database operations to finish.
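Until an async database API is available, one common mitigation (not necessarily what we did) is to push blocking calls onto a worker thread with `asyncio.to_thread` (Python 3.9+), so the event loop can keep serving WebSocket traffic. In the sketch below, `time.sleep` stands in for a synchronous ORM query, and a heartbeat task counts how often the loop gets to run other work while the query is in progress. Note that this only frees the event loop; it doesn’t reduce the CPU work itself.

```python
import asyncio
import time


def blocking_query(delay: float) -> str:
    """Stand-in for a synchronous ORM call such as session.query(...).all()."""
    time.sleep(delay)
    return "rows"


async def heartbeat(stop: asyncio.Event, ticks: list) -> None:
    """Records a tick every ~10 ms for as long as the event loop is free to run it."""
    while not stop.is_set():
        ticks.append(time.monotonic())
        await asyncio.sleep(0.01)


async def run(offload: bool, delay: float = 0.2) -> tuple:
    stop = asyncio.Event()
    ticks: list = []
    task = asyncio.create_task(heartbeat(stop, ticks))
    await asyncio.sleep(0)  # let the heartbeat take its first tick
    if offload:
        # Runs in the default thread pool; the loop keeps serving other tasks meanwhile
        result = await asyncio.to_thread(blocking_query, delay)
    else:
        # Blocks the whole event loop: no other coroutine runs for `delay` seconds
        result = blocking_query(delay)
    stop.set()
    await task
    return result, len(ticks)
```

Running `asyncio.run(run(offload=False))` yields only the single tick taken before the query, whereas `offload=True` lets the heartbeat keep ticking throughout.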

WebSocket TLS overhead is a different matter, as it’s real processing work. How much of it comes down to the efficiency of the particular WebSocket library versus, e.g., OpenSSL is hard to say.

Due to these two performance bottlenecks, the system can’t quite handle the maximum load. There’s also a third problem, which is a consequence of the combination of these two. When ECS tasks’ CPU usage is pushed too high, they fail to respond to the load balancer’s target group health checks, which causes the ECS task to be flagged as unhealthy. This starts a domino effect: the WebSocket connections get re-established to the remaining tasks, and then the next ECS task starts having problems.

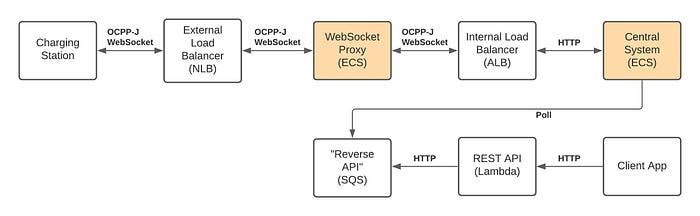

One way to proceed from here is to separate the WebSocket TLS overhead from OCPP business logic handling, so that we can deal with these two different kinds of performance bottlenecks separately. The system’s architecture evolves into the following:

Pros:

- Better security posture due to a more layered design.

- TLS overhead as well as authentication is moved out of the Central System. We could implement the WebSocket Proxy’s WebSocket server in a different language (e.g. C++) to gain performance benefits.

- We can have different scaling strategies for the WebSocket Proxy and Central System instances within ECS.

Cons:

- The system architecture looks way more complicated, but it’s still nothing very unusual.

- The Reverse API solution is not ideal for very frequent “reverse” communication.

- We must come up with a strategy for scaling both WebSocket Proxy and Central System instances within ECS.

- Deployments are challenging due to the WebSocket connections that are maintained within each Central System instance.
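A minimal sketch of the WebSocket Proxy’s role, assuming a hypothetical event format: the proxy keeps the connections (and would terminate TLS), turns each connect, incoming frame and disconnect into an event for a pluggable backend (the Central System, reached over HTTP or similar), and writes any reply back to the right socket. The `push` method hints at how a backend-initiated “reverse” message could be delivered once the connection id is known. Class, field and method names are illustrative, not taken from any library.

```python
import asyncio
import json
import uuid
from typing import Awaitable, Callable, Dict, Optional

# Backend = the Central System behind the proxy (HTTP call, Lambda invoke, ...)
Backend = Callable[[dict], Awaitable[Optional[str]]]
Sender = Callable[[str], Awaitable[None]]


class WebSocketProxy:
    """Holds WebSocket connections and forwards everything else as events."""

    def __init__(self, backend: Backend):
        self.backend = backend
        self.connections: Dict[str, Sender] = {}

    async def on_connect(self, charging_station_id: str, send: Sender) -> str:
        connection_id = uuid.uuid4().hex
        self.connections[connection_id] = send
        await self.backend({"kind": "connect", "connectionId": connection_id,
                            "chargingStationId": charging_station_id})
        return connection_id

    async def on_message(self, connection_id: str, charging_station_id: str, text: str) -> None:
        reply = await self.backend({"kind": "message", "connectionId": connection_id,
                                    "chargingStationId": charging_station_id, "body": text})
        if reply is not None:
            await self.connections[connection_id](reply)

    async def on_disconnect(self, connection_id: str, charging_station_id: str) -> None:
        self.connections.pop(connection_id, None)
        await self.backend({"kind": "disconnect", "connectionId": connection_id,
                            "chargingStationId": charging_station_id})

    async def push(self, connection_id: str, frame: str) -> bool:
        """Reverse direction: deliver a backend-initiated frame to one connection."""
        send = self.connections.get(connection_id)
        if send is None:
            return False
        await send(frame)
        return True
```

Since the backend only ever sees events, the Central System behind it can be redeployed without dropping WebSocket connections, as long as the proxy itself stays up.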

Towards serverless

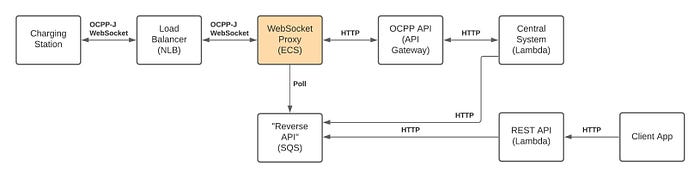

Now we have separated WebSocket management and TLS overhead into a dedicated component. To deal with the Central System performance bottlenecks, we can proceed to replace ECS with Lambda and the Application Load Balancer with API Gateway. Of course, there’s one practical problem: the existing ocpp library doesn’t support this event-based, serverless model. Nevertheless, the architecture in this case would be the following:

Pros:

- Better security posture, again due to the more layered design.

- TLS overhead as well as authentication is moved out of the Central System. We could implement the WebSocket Proxy’s WebSocket server in a different language (e.g. C++) to gain performance benefits.

- We can now deploy OCPP business logic related changes to Central System without affecting WebSocket connections.

- Cost savings, as we’re billed only when event handling logic is executed in Lambda, whereas the previous ECS-based setup is billed while it’s running, no matter the load.

Cons:

- The system architecture still looks almost as complicated as the previous version, but we’ve managed to eliminate one component whose scaling we need to deal with.

- The Reverse API solution is not ideal for very frequent “reverse” communication.

- We still must come up with a strategy for scaling the WebSocket Proxy.

- The existing ocpp library doesn’t support this model. Until there’s a library that supports an event-based, serverless model, this is not a feasible architecture.
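In this model the Central System becomes a function of a single event. Assuming the WebSocket Proxy POSTs each forwarded event to API Gateway as JSON, and API Gateway invokes Lambda with its proxy integration (so the envelope arrives as a string in `event["body"]`), a handler could look roughly like the sketch below. The envelope fields, the `on` decorator and the handler names are hypothetical; they only mimic a decorator-based routing style and are not the API of the ocpp library or of ocpp-asgi. Response payloads use OCPP 1.6 field names.

```python
import base64
import json
from datetime import datetime, timezone
from typing import Callable, Dict

HANDLERS: Dict[str, Callable[[str, dict], dict]] = {}


def on(action: str):
    """Register a handler for an OCPP action (hypothetical helper)."""
    def register(func):
        HANDLERS[action] = func
        return func
    return register


def now() -> str:
    return datetime.now(timezone.utc).isoformat()


@on("BootNotification")
def boot_notification(charging_station_id: str, payload: dict) -> dict:
    return {"status": "Accepted", "currentTime": now(), "interval": 300}


@on("Heartbeat")
def heartbeat(charging_station_id: str, payload: dict) -> dict:
    return {"currentTime": now()}


def lambda_handler(event: dict, context=None) -> dict:
    """Entry point for an API Gateway (proxy integration) to Lambda invocation."""
    raw = event.get("body") or "{}"
    if event.get("isBase64Encoded"):
        raw = base64.b64decode(raw).decode("utf-8")
    envelope = json.loads(raw)
    body = envelope.get("body")
    if envelope.get("kind") != "message" or body is None:
        return {"statusCode": 204, "body": ""}  # connect/disconnect: nothing to reply
    frame = json.loads(body)
    if frame[0] != 2:  # only CALLs need a reply
        return {"statusCode": 204, "body": ""}
    _, unique_id, action, payload = frame
    handler = HANDLERS.get(action)
    if handler is None:
        reply = [4, unique_id, "NotImplemented", f"{action} is not supported", {}]
    else:
        reply = [3, unique_id, handler(envelope["chargingStationId"], payload)]
    return {"statusCode": 200, "headers": {"Content-Type": "application/json"},
            "body": json.dumps(reply)}
```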

Fully serverless

API Gateway for WebSockets works exactly like the WebSocket Proxy we pictured in the previous architecture. The system architecture would be the following:

Pros:

- The system architecture looks really straightforward.

- WebSocket connection management and TLS overhead are no longer our headache.

- We can now focus solely on OCPP business logic.

- We’ll have an extremely scalable and cost-efficient system without sacrificing security.

Cons:

- The existing ocpp library doesn’t support this model. Until there’s a library that supports an event-based, serverless model, this is not a feasible architecture.

- API Gateway for WebSockets doesn’t support path parameter style unique endpoint URLs.

- This will likely put a lot of stress on downstream services, e.g. the database, so we don’t yet know exactly what kinds of problems we’ll need to deal with.
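With API Gateway for WebSockets, the connection lifecycle reaches Lambda as `$connect`, `$disconnect` and `$default` route invocations, identified by `event["requestContext"]["routeKey"]` and `event["requestContext"]["connectionId"]`. When a route response is configured for the `$default` route, the `body` returned by Lambda is sent back to the client. The sketch below is hedged in two ways: the `chargingStationId` query-string parameter is purely an assumption standing in for the missing path-parameter support, and connection state lives in a module-level dict only for illustration, since Lambda instances don’t share memory and a real system would use an external store.

```python
import json
from datetime import datetime, timezone
from typing import Dict

# Illustration only: Lambda instances don't share memory; use an external store in practice.
CONNECTIONS: Dict[str, str] = {}  # connectionId -> charging station id


def lambda_handler(event: dict, context=None) -> dict:
    ctx = event["requestContext"]
    route, connection_id = ctx["routeKey"], ctx["connectionId"]

    if route == "$connect":
        params = event.get("queryStringParameters") or {}
        station_id = params.get("chargingStationId")  # hypothetical parameter name
        if not station_id:
            return {"statusCode": 400}  # a non-2xx response rejects the connection
        CONNECTIONS[connection_id] = station_id
        return {"statusCode": 200}

    if route == "$disconnect":
        CONNECTIONS.pop(connection_id, None)
        return {"statusCode": 200}

    # $default: every OCPP-J frame sent by the Charging Station
    frame = json.loads(event.get("body") or "[]")
    if not isinstance(frame, list) or len(frame) != 4 or frame[0] != 2:
        return {"statusCode": 200}  # not a CALL (e.g. a CALLRESULT): nothing to reply
    _, unique_id, action, payload = frame
    if action == "Heartbeat":
        reply = [3, unique_id, {"currentTime": datetime.now(timezone.utc).isoformat()}]
    else:
        reply = [4, unique_id, "NotImplemented", f"{action} is not supported", {}]
    # With a $default route response configured, API Gateway sends this body to the client
    return {"statusCode": 200, "body": json.dumps(reply)}
```

Backend-initiated “reverse” messages would not go through this return value; they would be posted to a specific connection id via API Gateway’s connection management (`@connections`) API.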

Conclusion

Having described the evolution from a baseline to a fully serverless system, I hope it’s clear why the event-based serverless model has big advantages. That was exactly my motivation, and the reason I took the chance to try to transform the existing ocpp library to support this model.

WebSocket is a great protocol and, from the client perspective, simple and efficient. The downside is that it might introduce some tricky problems on the backend side. Preferably, much of this complexity would be handled by the cloud provider’s services so that developers could focus on business logic. AWS API Gateway is a great service, and I hope its support for WebSockets will get much love and attention in the future.

My ocpp-asgi library is still in its alpha stage. As the domain is quite niche, time will tell whether there’s enough demand to develop it to a maturity suitable for production use.