Introducing Paita: Python AI Textual Assistant

Interact with multiple AI Services and models right from your terminal

Paita is a chat interface for your terminal that you can use to interact with different AI services and models. It works in virtually all terminals on macOS, Linux and Windows and requires Python 3.8 or newer. You can install Paita from PyPI:

> pip install paita

Once installed, you need to configure access to your chosen AI service. Currently AWS Bedrock and OpenAI are supported. OpenAI requires an API key in an environment variable:

> export OPENAI_API_KEY=<openai-api-key>

Then you can just launch Paita:

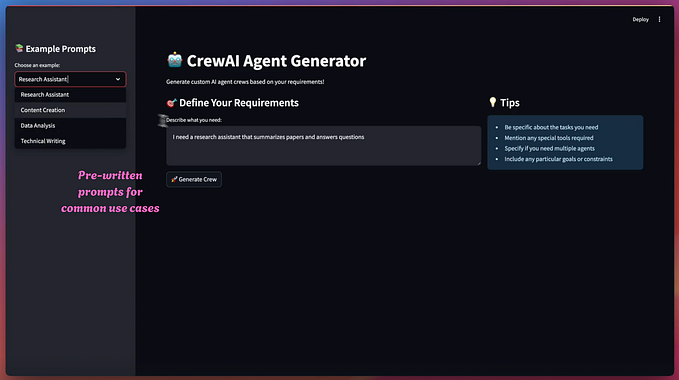

> paitaWhile Paita starts it will load all the available AI Services’ models and prompts you to select, which model you want to use. Paita even works on various cloud consoles like AWS’s CloudShell:
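If Paita doesn't start, or doesn't offer the OpenAI models you expect, the prerequisites mentioned earlier are the first things to check. Here is a minimal sketch, not part of Paita, that checks the Python version, whether a `paita` command is on `PATH`, and whether `OPENAI_API_KEY` is set. The function name `preflight` is hypothetical, and the sketch deliberately skips AWS Bedrock credentials, since the post doesn't describe how those are configured.

```python
from __future__ import annotations

import os
import shutil
import sys

# Minimum Python version stated in the post.
MIN_PYTHON = (3, 8)


def preflight(env: dict[str, str] | None = None) -> list[str]:
    """Return human-readable problems; an empty list means the basics look fine.

    `env` defaults to the real environment; passing a dict makes the check testable.
    """
    env = dict(os.environ) if env is None else env
    problems: list[str] = []

    if sys.version_info < MIN_PYTHON:
        found = ".".join(str(part) for part in sys.version_info[:3])
        problems.append(f"Python {MIN_PYTHON[0]}.{MIN_PYTHON[1]}+ required, found {found}")

    # `pip install paita` should put a `paita` command on PATH.
    if shutil.which("paita") is None:
        problems.append("`paita` command not found on PATH (try `pip install paita`)")

    # OpenAI access is configured through this environment variable.
    if not env.get("OPENAI_API_KEY"):
        problems.append("OPENAI_API_KEY is not set, so OpenAI models cannot be used")

    return problems


if __name__ == "__main__":
    issues = preflight()
    for issue in issues:
        print("-", issue)
    if not issues:
        print("Prerequisites look fine; run `paita`.")
```

Save it as, say, `check_paita.py` and run `python check_paita.py`; if nothing is reported, the basics are in place.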

Paita is built on the awesome Textual framework and uses LangChain to interact with AI models. For quite some time, I had been looking for a chance to use Textual in a project, but it wasn't until I started working with AI models that I realized there might be a good opportunity to build something useful.

Paita is still at a very early stage, but it has already proven to be quite a handy tool. I use it instead of ChatGPT's web interface and when working with AWS Bedrock chat models. It's interesting to be able to switch easily back and forth between different services' models and compare their answers to the same questions.
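One reason this kind of side-by-side comparison is straightforward with LangChain, which Paita uses, is that its chat models share a common interface: `invoke` takes a prompt and returns a message whose text is in `.content`. The sketch below only illustrates that idea and is not Paita's own code; `ChatModel`, `compare_models` and `EchoModel` are hypothetical names, and `EchoModel` is an offline stand-in so the example runs without API keys.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Any, Protocol


class ChatModel(Protocol):
    """The slice of LangChain's chat model interface this sketch relies on:
    `invoke` takes a prompt and returns a message object with a `.content` attribute."""

    def invoke(self, input: str) -> Any: ...


def compare_models(models: dict[str, ChatModel], question: str) -> dict[str, str]:
    """Send the same question to every model and collect the answers by name."""
    return {name: str(model.invoke(question).content) for name, model in models.items()}


@dataclass
class FakeReply:
    content: str


class EchoModel:
    """Hypothetical offline stand-in for a real chat model, used only for this demo."""

    def __init__(self, label: str) -> None:
        self.label = label

    def invoke(self, input: str) -> FakeReply:
        return FakeReply(content=f"{self.label} answered: {input}")


if __name__ == "__main__":
    # With real services you could pass LangChain models instead (assumption:
    # the langchain-openai and langchain-aws packages are installed and configured;
    # the model names are only examples):
    #   from langchain_openai import ChatOpenAI
    #   from langchain_aws import ChatBedrock
    #   models = {"openai": ChatOpenAI(model="gpt-4o-mini"),
    #             "bedrock": ChatBedrock(model_id="anthropic.claude-3-haiku-20240307-v1:0")}
    models = {"model-a": EchoModel("model-a"), "model-b": EchoModel("model-b")}
    for name, answer in compare_models(models, "What is Textual?").items():
        print(f"[{name}] {answer}")
```

Because `compare_models` only depends on `invoke` and `.content`, swapping the stand-ins for real OpenAI and Bedrock models would not change the comparison code.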

Give Paita a try and let me know what you think!