How Do You Like Your AWS Lambda Concurrency? — Part 3: Go and Rust

Developer Experience Review and Performance Benchmark of Python, Node.js, Go, Rust, Java, and C++ in AWS Lambda

In this five-part blog series, we explore how much speed improvement can be achieved when simple sequential logic is converted to a concurrent implementation. We review the developer experience of implementing the same concurrent logic in Python, Node.js, Go, Rust, Java and C++ and benchmark each implementation in AWS Lambda with different resource configurations.

You can jump directly to the different articles in this series:

- Part 1 — Introduction

- Part 2 — Python and Node.js

- Part 3 — Go and Rust

- Part 4 — Java and C++

- Part 5 — Summary

In this third post of the series, we focus on two modern and popular statically typed, compiled languages: Go and Rust. The article follows the same structure as the previous one on Python and Node.js. This time, though, because the code is longer, we highlight only the most important parts of each implementation.

Go Implementation

Both the Python and Node.js implementations are single-threaded and rely on asynchronous programming models. With Go, we start to benefit from a runtime that can execute code on multiple CPU cores in parallel.

Go’s paradigm for concurrency and parallelism is based on goroutines and channels. The Go runtime scheduler takes care of scheduling goroutines across multiple CPUs when they are available at runtime.
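To make the goroutine and channel idea concrete, here is a minimal sketch that is not taken from the project. The 'sumAsync' helper is a hypothetical name used only for illustration. It starts a goroutine, sends the result over a channel, and prints how many CPUs the scheduler can use.

```go
package main

import (
	"fmt"
	"runtime"
)

// sumAsync is a hypothetical helper: it sums the numbers in a goroutine
// and delivers the total over a channel.
func sumAsync(nums []int) <-chan int {
	out := make(chan int, 1) // buffered so the goroutine never blocks on send
	go func() {
		total := 0
		for _, n := range nums {
			total += n
		}
		out <- total
	}()
	return out
}

func main() {
	// The scheduler spreads goroutines over up to GOMAXPROCS OS threads.
	fmt.Println("logical CPUs:", runtime.NumCPU())
	fmt.Println("GOMAXPROCS:", runtime.GOMAXPROCS(0)) // 0 only queries the value

	left := sumAsync([]int{1, 2, 3})
	right := sumAsync([]int{4, 5, 6})
	fmt.Println("total:", <-left+<-right) // total: 21
}
```

Whether the two goroutines actually run in parallel depends on how many CPUs the environment exposes, which in Lambda follows the configured memory size.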

As with Python and Node.js, we want to be able to run the code locally during development. For this, we need to organize the project files so that there is one main package with a main function as the entry point for local development and another as the entry point in the Lambda runtime environment.

To start with we have Go’s main entrypoint from cmd/main.go file:

In the entrypoint’s main function, we call the ‘lambda.Start’ function, which takes our ‘handler’ function as an argument.

Here’s Go’s ‘handler’ function from internal/handler.go file:

Here, we call the ‘processor’ function and then handle Go’s typical result-and-error return pattern. We have implemented struct types for both Event and Response.

Go’s ‘processor’ function from internal/handler.go file is already a lengthy one, so we’ll cover only the part that’s interesting from the concurrent logic perspective.

We create a response channel that carries string pointers, as well as an instance of the WaitGroup synchronization primitive. For each S3 object key, we increment the wait group’s counter and use the ‘go’ keyword to start a goroutine that executes the ‘get’ function and pushes the resulting body to the response channel. A separate goroutine waits until all of those goroutines have completed and then closes the response channel. From there, the function goes on to collect the responses from the channel.
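The pattern described above can be sketched roughly as follows. This is not the project’s code: ‘get’ is replaced with a hypothetical stand-in that upper-cases the key instead of downloading from S3, so the example runs without AWS access.

```go
package main

import (
	"fmt"
	"strings"
	"sync"
)

// get is a hypothetical stand-in for the S3 download. It returns a pointer
// to the "body" so the channel can carry string pointers as in the article.
func get(key string) *string {
	body := strings.ToUpper(key)
	return &body
}

// processor fans out one goroutine per key and collects all bodies.
func processor(keys []string) []string {
	responses := make(chan *string)
	var wg sync.WaitGroup

	for _, key := range keys {
		wg.Add(1) // one counter increment per object key
		go func(k string) {
			defer wg.Done()
			responses <- get(k)
		}(key)
	}

	// Close the channel once every worker goroutine has finished, so the
	// range loop below terminates.
	go func() {
		wg.Wait()
		close(responses)
	}()

	var bodies []string
	for body := range responses {
		if body != nil {
			bodies = append(bodies, *body)
		}
	}
	return bodies
}

func main() {
	bodies := processor([]string{"a", "b", "c"})
	fmt.Println(len(bodies), "objects fetched")
}
```

Note that the bodies arrive in completion order, not in the order of the keys. Closing the channel from a separate goroutine is what lets the collecting loop start reading while the workers are still running.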

Finally let’s see Go’s ‘get’ function:

Because the caller runs the ‘get’ function in a goroutine, nothing in the function’s own implementation implies concurrency or parallelism; it reads as ordinary sequential code.
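To show what such a plainly sequential function can look like, here is a sketch under stated assumptions. The ‘objectStore’ interface and the in-memory ‘memoryStore’ are hypothetical stand-ins for the S3 client, introduced only so the example runs with the standard library.

```go
package main

import (
	"fmt"
	"io"
	"strings"
)

// objectStore is a hypothetical interface standing in for the S3 client.
type objectStore interface {
	Open(key string) (io.ReadCloser, error)
}

// memoryStore is an in-memory fake used only for this example.
type memoryStore map[string]string

func (m memoryStore) Open(key string) (io.ReadCloser, error) {
	content, ok := m[key]
	if !ok {
		return nil, fmt.Errorf("no such key: %s", key)
	}
	return io.NopCloser(strings.NewReader(content)), nil
}

// get reads one object and returns its body as a string pointer.
// Nothing here is concurrent; the caller decides whether to use a goroutine.
func get(store objectStore, key string) (*string, error) {
	rc, err := store.Open(key)
	if err != nil {
		return nil, fmt.Errorf("open %q: %w", key, err)
	}
	defer rc.Close()

	data, err := io.ReadAll(rc)
	if err != nil {
		return nil, fmt.Errorf("read %q: %w", key, err)
	}
	body := string(data)
	return &body, nil
}

func main() {
	store := memoryStore{"hello.txt": "hello"}
	body, err := get(store, "hello.txt")
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Println(*body)
}
```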

Rust Implementation

Developing with Rust was a new experience for me, something I had been interested in trying for some time.

I chose the async/await approach with Rust because that is what the AWS SDK for Rust examples advise. You can also achieve concurrency or parallelism by working directly with threads and channels.

In Rust’s case, we make an exception to the rule that it should also be possible to execute the handler function locally. Instead, we rely on the cargo-lambda tool’s ability to invoke the code as if it were a local Lambda function. This eliminates the need to restructure the code so that two different binaries can be built and run from one project.

To start with we have Rust’s main entrypoint from src/main.rs file:

In Rust, when working with async/await, you need an asynchronous runtime such as Tokio. The program’s entrypoint, an async main function, must be annotated with the #[tokio::main] macro; inside that function, asynchronous code using async/await can then be written.

Here’s Rust’s ‘handler’ function:

We invoke the async ‘processor’ function with the .await? syntax. Calculating and formatting the elapsed time takes a while to understand and digest for someone not previously familiar with the language.

From Rust’s ‘processor’ function we only cover the part that’s interesting from the concurrent logic perspective.

First we initialize the structure that manages the concurrently executed futures. Next we need to deal with Rust’s ownership model and clone the variables used inside the async move block. As the last step within the for loop, we push the async block onto the list of futures. From here, the code triggers the execution of the tasks, collects them, and processes the results.

Finally we have Rust’s ‘get’ function in its entirety:

In the ‘get’ function we see Rust’s typical method-chaining style in effect. Reading the body and converting it to a string type takes a couple of intermediate steps.

Building Deployment Packages

Now that we’re working with compiled languages, we must make sure that we target the right processor architecture. The AWS Lambda Go runtime currently supports only the Intel/AMD (x86_64) architecture, which makes Go an exception in this evaluation; with the other languages, we use the ARM runtime. There’s no dedicated runtime for Rust, but AWS Lambda supports container images as a deployment package type.

For the Go deployment package we use the following Dockerfile to create a build environment.

FROM public.ecr.aws/sam/build-go1.x:1.86.1

And the following script builds a binary and packages it into a zip file for deployment.

#!/bin/bash

DIR="cmd"

GOARCH=amd64 GOOS=linux go build -o build/"$DIR" "$DIR"/*

zip -jrmq build/"$DIR".zip build/"$DIR"

In Rust, we use the ‘cargo-lambda’ tool to generate a release binary for the ARM architecture and subsequently package it into a zip file.

cargo lambda build --arm64 --release

zip -j ./target/lambda/concurrency_eval/bootstrap.zip ./target/lambda/concurrency_eval/bootstrap

We could also use the ‘cargo-lambda’ tool to deploy our Lambda, which is a nice option when you want to quickly try out your code on AWS. But we use Terraform instead, and in our Terraform resources we define the AWS Lambda runtime as “provided.al2”. This is the OS-only custom runtime for Lambda, based on Amazon Linux 2.

Subjective Review of Go and Rust

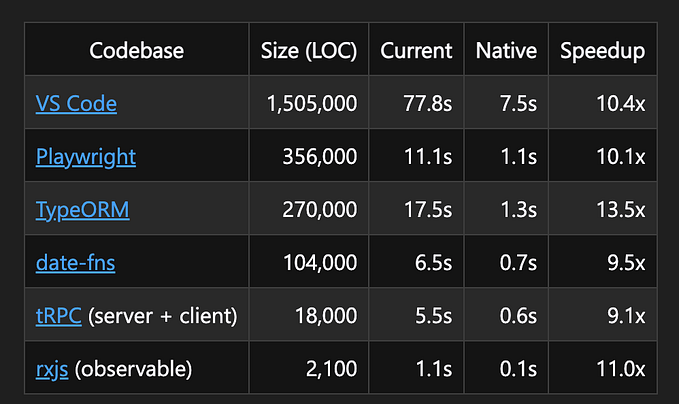

Before diving into the details of my subjective review, let’s clarify one thing related to the grading. I’m using grades from 1 to 6 where higher is better. While a grade of 6 doesn’t imply that the language is flawless in that regard, a grade of 1 doesn’t necessarily mean it’s absolutely terrible. Grading is simply a way to compare different aspects of each language.

+---------------------------------------------------+----+------+
| Category                                          | Go | Rust |
+---------------------------------------------------+----+------+
| Code amount                                       | 4  | 4    |
| Code complexity                                   | 3  | 2    |
| AWS SDK developer experience                      | 5  | 5    |
| Code formatting and linting                       | 5  | 5    |
| Ease of setting up project and 3rd party packages | 3  | 5    |
| Ease of debugging runtime/compile time errors     | 5  | 3    |
| Ease of creating deployment package               | 3  | 4    |
| TOTAL                                             | 28 | 28   |
+---------------------------------------------------+----+------+

Both Go and Rust implementations are in the same range in terms of the code amount. But there’s significantly more code now. 155 lines in Go compared to 43 lines in Python is quite a jump.

Go’s goroutines and channels make it clean to implement concurrent logic. Go’s idioms are quite easy to learn and understand. With Rust we definitely have quite a steep learning curve. Implementing concurrent code in Rust is straightforward but everything else in Rust’s programming paradigm takes a bit of time to get familiar with.

Both languages have built-in code formatters, which is a great thing. With Go I used golangci-lint but didn’t explore its configuration options beyond the default settings. After fighting with Rust’s compiler I almost didn’t feel the need to deal with linters in this experiment. Just out of curiosity I ran the clippy linter with the default options, and it provided some nice warnings that resulted in simpler code.

Rust has good tools to start a new project. You can use cargo new or cargo lambda new if you are specifically developing a Lambda function. Go doesn’t have anything similar built-in. Also dealing with Go’s directory/module structure felt a bit painful. Go’s reliance on third-party packages solely through GitHub references feels shady. Having a central package repository gives at least a feeling of trust. It almost feels like Go’s project/package management system is fragile being so lightweight and agile.

Go’s compiler is fast and provides decent help fixing the compilation issues. Rust’s compiler is notoriously strict and picky. But once the code compiled it already worked as it was meant to.

Creating a deployment package takes a bit more effort than with Python and Node.js, but nothing that bad. Go loses one extra point in this evaluation because there’s no ARM architecture option in the AWS Lambda Go runtime.

Conclusion

Unlike Go, Rust is probably not yet that popular a choice for implementing Lambda functions. I had some previous experience working with Go and would pick it over Rust at the moment. That might change after spending some more time familiarizing myself with Rust’s paradigms and ecosystem. What makes Rust especially appealing are the tools (like PyO3 and rustimport) for calling compute-optimized Rust code from Python.

These languages are considered modern performance beasts, so in the final part of this blog series we’ll see how they compare from the objective performance perspective.

Next in this series we cover the Java and C++ implementations.

Clap, comment on and share this post, and follow me for more interesting posts to come. Also, experiment by yourself with the provided code!

Links to GitHub repositories: